It's been a while since I blogged about my Dell XPS 15 (9550), and one of my remarks there was that the Thunderbolt port was useless because there was no working dock for it: Dell's TB15 was a fiasco. (That was one of two issues with the XPS 15 9550, the other being that the Wireless 1830 card is a piece of horse dung.)

Fast forward a few months and Dell has fixed it by releasing a new Dock, the TB16. I ordered the 240 Watt Version, Dell Part 452-BCNU.

First observation: the 240 Watt power supply is absolutely massive, but relatively flat. That's not an issue since it's stationary anyway, but if you were considering carrying it around, think again. Also, one important note on the difference between USB-C and Thunderbolt 3: they use the same connector, but Thunderbolt 3 is four times as fast as USB 3.1 Gen2 (40 Gbit/s compared to 10 Gbit/s). This matters when driving two high resolution (4K @ 60 Hz) monitors plus a bunch of other peripherals. There are plenty of USB-C docks out there, but very few Thunderbolt 3 docks, which is why the arrival of the TB16 is such a big deal.
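Here is a back-of-the-envelope JavaScript sketch that puts rough numbers on the bandwidth argument. It assumes 24 bits per pixel with no compression or chroma subsampling. It also ignores blanking intervals and link encoding overhead, so real requirements are higher. It does not model how a particular dock carries video (USB-C docks often use DisplayPort Alternate Mode rather than USB data bandwidth), so treat it as a sanity check and not as a spec.

```javascript
// Raw pixel data rate of a display stream in Gbit/s. Blanking intervals,
// audio and link encoding overhead are ignored, so this is a lower bound.
function rawVideoGbps(width, height, refreshHz, bitsPerPixel = 24) {
  return (width * height * refreshHz * bitsPerPixel) / 1e9;
}

const oneUhd = rawVideoGbps(3840, 2160, 60);     // one 4K @ 60 Hz monitor
const twoUhd = 2 * oneUhd;                       // two of them
const twoQhd = 2 * rawVideoGbps(2560, 1440, 60); // two 2560x1440 @ 60 Hz monitors

console.log(`one 4K@60:    ${oneUhd.toFixed(2)} Gbit/s`);
console.log(`two 4K@60:    ${twoUhd.toFixed(2)} Gbit/s`);
console.log(`two 1440p@60: ${twoQhd.toFixed(2)} Gbit/s`);
console.log(`two 4K fit in 10 Gbit/s? ${twoUhd <= 10}; in 40 Gbit/s? ${twoUhd <= 40}`);
```

Two 4K streams at 60 Hz come to roughly 24 Gbit/s of raw pixel data. That is well beyond 10 Gbit/s but comfortably within 40 Gbit/s.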

Update: On the Dell XPS 15 laptop (9550 and 9560), the Thunderbolt 3 port is connected via only two instead of four PCI Express 3.0 lanes, which limits the maximum bandwidth to 20 Gbit/s. If you were thinking of connecting a potent external graphics card (e.g., via a Razer Core) or a fast storage system, that port will be a bottleneck. It does seem to be usable for 8K video editing though, so YMMV.

The promise of Thunderbolt is simple: One single cable from the Notebook to the Dock for absolutely everything. And that's exactly what it delivers. Let's look at the ports.

In the front, there are two USB 3.0 SuperSpeed ports, a headset jack that supports TRRS connections (stereo headset and microphone), and an indicator light that shows whether the laptop is charging.

In the back, we have VGA, HDMI 1.4a, Mini DisplayPort and regular DisplayPort 1.2 for up to 4 external monitors. There's a Gigabit Ethernet port (technically a USB Ethernet adapter), two regular USB 2.0 ports, one USB 3.0 SuperSpeed port, and a Thunderbolt 3 port for daisy chaining that also works as a regular USB-C port. And last but not least, a regular stereo audio jack for speakers and the power input for the 240 Watt power supply.

Update: I played around with the monitor ports, and it seems that my XPS 15 9550 can only drive 3 monitors at any given time: either the internal laptop screen + 2 DP monitors, 2 DP + 1 HDMI, or 2 DP + 1 VGA monitor. The User's Guide says that 3 or 4 monitor configurations should be possible (4 only without the laptop display). I haven't experimented much, but it seems that 3 monitors would require going down to 30 Hz, which is obviously not an option in a real world scenario. Maybe it's possible, but for my use case, 2 DP + the internal laptop screen is good enough. For anything more, a USB DisplayLink graphics adapter will do the job.

My setup uses a lot of these ports: Two Monitors (2560x1440 @ 60 Hz resolution) via DisplayPort and mini DisplayPort, Gigabit Ethernet, USB 3.0 to a Hub, both Audio Outputs, and occasionally an external USB 3.0 Hard Drive.

It all connects to the laptop with a single cable, just as promised. And that includes charging, so no need for the laptop power supply.

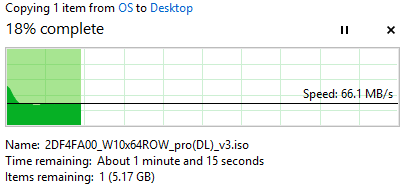

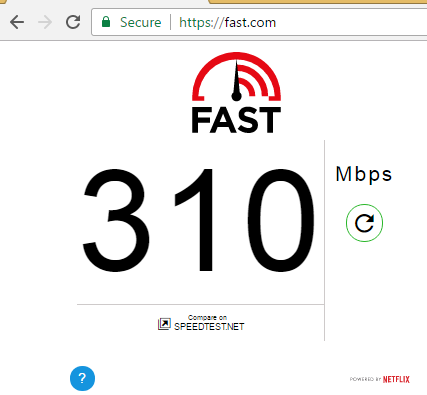

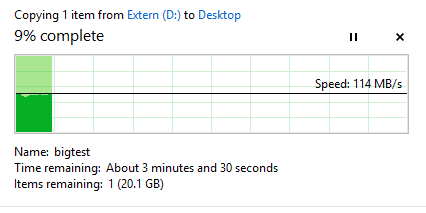

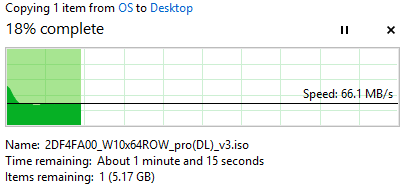

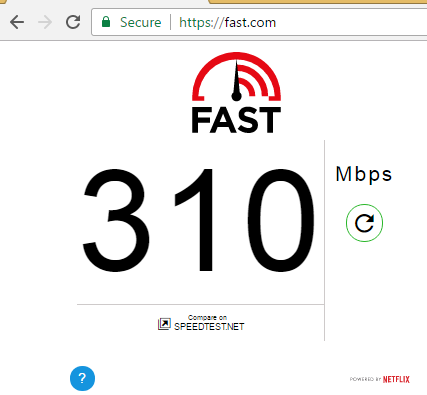

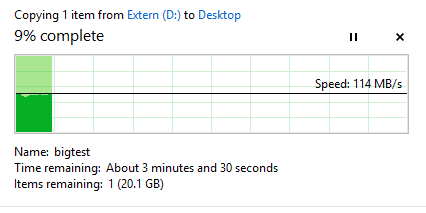

Performance-wise, it's pretty satisfactory. The Ethernet port gets about 70 MB/s (Update: I've seen higher speeds, up to 100 MB/s, so I guess my home server is the bottleneck), and I can make full use of my 300 MBit/s internet connection (humblebrag), something I couldn't do over wireless. My external hard drive gets 110 MB/s. Since it's a 2.5" spinning drive, that is probably the limit of the drive itself. I haven't tried two 4K monitors, but my 2560x1440 screens have no issues with flickering or anything else. Copying directly from a network share to the USB 3.0 drive also works fine at the same speed (~70 MB/s) without disturbing the monitors.
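The numbers above mix MB/s (file copies) and MBit/s (the internet connection). A quick conversion puts them on the same scale, since 1 byte = 8 bits. This sketch ignores Ethernet/IP/TCP/SMB protocol overhead, so the real-world ceiling of a Gigabit link is somewhat below the theoretical 125 MB/s.

```javascript
// Link speeds are quoted in megabits per second, file copies in megabytes
// per second (1 byte = 8 bits). Protocol overhead is ignored.
const mbitPerSecToMBPerSec = (mbit) => mbit / 8;
const mbPerSecToMbitPerSec = (mb) => mb * 8;

const gigabitCeiling = mbitPerSecToMBPerSec(1000); // Gigabit Ethernet, theoretical maximum in MB/s
const internet = mbitPerSecToMBPerSec(300);        // the 300 Mbit/s connection in MB/s
const typicalCopy = mbPerSecToMbitPerSec(70);      // ~70 MB/s copy as Mbit/s of payload
const bestCopy = mbPerSecToMbitPerSec(100);        // ~100 MB/s copy as Mbit/s of payload

console.log({ gigabitCeiling, internet, typicalCopy, bestCopy });
```

The 70 to 100 MB/s copies correspond to roughly 560 to 800 Mbit/s, which is most of a Gigabit link. The 300 MBit/s internet connection is only 37.5 MB/s, well within what the dock's Ethernet port can carry.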

Make sure to go to Dell's support site and download the latest BIOS, Intel Thunderbolt Controller Driver and Intel Thunderbolt 3 Firmware Update, along with the USB Ethernet, USB Audio and ASMedia USB 3.0 Controller drivers. This is absolutely necessary, since older firmware and driver versions have a lot of issues. FWIW, I'm running 64-Bit Windows 8.1 and everything works perfectly fine. I haven't tested on Windows 10, but I assume it'll be fine there as well.

It took way too long and required a lot of patches - but now that it's here and working, I can say that the TB16 is everything Thunderbolt promised. It's expensive, but if Thunderbolt stops changing connectors with every version, I can see the dock lasting a long time, on many future laptops.

March 23rd, 2017 in Technology | tags: dell, Stack Overflow

Here are a few git settings that I prefer to set, now that I actually work on projects with other people and care about a useful history.

```shell
git config --global branch.master.mergeoptions "--squash"
```

This always squashes merges into master. I think that work big enough to require multiple commits should be done in a branch and then squash-merged into master. That way, master becomes a great overview of individual features rather than the nitty-gritty of all the back and forth of a feature. Note that a squash merge doesn't create the commit by itself: git stages the combined changes, and you finish with git commit.
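To make the difference concrete, here is a toy JavaScript model of master's history after each kind of merge. The commit objects and function names are made up for illustration. The model assumes the merge would otherwise fast-forward, and it only tracks commit messages and touched files.

```javascript
// Toy model: a branch's history is an array of commits. This illustrates the
// resulting history only; it is not how git stores anything.

// Without --squash (assuming a fast-forward), every branch commit lands in master.
function mergeKeepingCommits(master, branch) {
  return [...master, ...branch];
}

// With --squash, the combined changes become a single commit on master.
// (Real git only stages the changes; you create this commit with git commit.)
function squashMerge(master, branch, message) {
  const files = [...new Set(branch.flatMap((commit) => commit.files))];
  return [...master, { message, files }];
}

const master = [{ message: "Initial commit", files: ["README.md"] }];
const feature = [
  { message: "WIP login form", files: ["login.html"] },
  { message: "Fix typo", files: ["login.html"] },
  { message: "Add validation", files: ["login.js"] },
];

console.log(mergeKeepingCommits(master, feature).map((c) => c.message));
console.log(squashMerge(master, feature, "Add login page").map((c) => c.message));
```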

```shell
git config --global pull.rebase true
```

This always rebases your local commits. In plain English: it always puts your local, unpushed commits on top of the history when you pull changes from the server. I find this useful because if I'm working on something for a while, I can regularly pull in other people's changes without fracturing my history. Yes, this is history rewriting, but I care more about a useful history than a "pure" one.
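Here's a toy JavaScript model of that behavior, for illustration only. It treats history as a flat list of commit ids and ignores conflicts, merge commits and everything else git actually does. pullRebase is a made-up name.

```javascript
// Toy model of `git pull --rebase`. History is an array of commit ids; the
// common prefix is the history both sides already share. Commits fetched from
// the server come next, and local, unpushed commits are replayed on top.
// Replayed commits get a trailing ' because a rebase creates new commits with
// new hashes (the "history rewriting" part).
function pullRebase(local, remote) {
  let shared = 0;
  while (shared < local.length && shared < remote.length && local[shared] === remote[shared]) {
    shared++;
  }
  const unpushed = local.slice(shared);
  return [...remote, ...unpushed.map((id) => `${id}'`)];
}

const remote = ["a", "b", "c", "d"];     // a colleague pushed d
const local = ["a", "b", "c", "x", "y"]; // my unpushed work: x and y
console.log(pullRebase(local, remote).join(" ")); // a b c d x' y'
```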

Combined with git repository hosting (GitLab, GitHub Enterprise, etc.), I found that browsing history is a really useful tool to keep up with code changes (especially across timezones), provided that the history is actually useful.

December 15th, 2016 in Development | tags: git

Setting up IIS usually results in some error (403, 500...) at first. Since I run into this a lot and always forget to write down the steps, here's my cheatsheet, which I'll update if I run into additional issues.

Folder Permissions

- Give IIS_IUSRS Read permission to the folder containing your code.
- If StaticFile throws a 403 when accessing static files, also give IUSR permission.
- Make sure files aren't encrypted (Properties => Advanced).

If your code modifies files in that folder (e.g., using the default identity database or logging, etc.), you might need write permissions as well.

Registering ASP.net with IIS

If you install the .net Framework after IIS, you need to run one of these:

- Windows 7/8/2012:

```bat
C:\Windows\Microsoft.NET\Framework64\v4.0.30319\aspnet_regiis.exe -i
```

- Windows 10:

```bat
dism /online /enable-feature /all /featurename:IIS-ASPNET45
```

October 21st, 2016 in Uncategorized

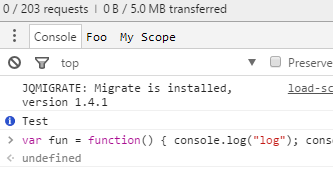

For a lot of front-end JavaScript work, the browser has become the de facto IDE thanks to powerful built-in tools in Firefox, Chrome or Edge. However, one area that has seen little improvement over the years is the console.log function.

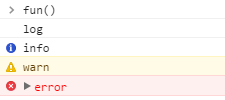

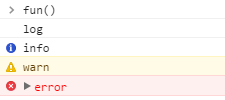

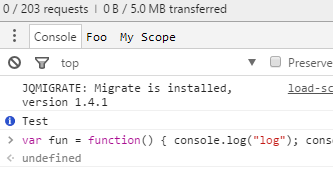

Nowadays, I might have 8 or 9 different modules in my webpage that all output debug logs when running non-minified versions to help debugging. Additionally, the console is also my REPL for some ad-hoc coding. This results in an avalanche of messages that are hard to tell apart. There are a few ways to make things stand out. console.info, console.warn and console.error are highlighted differently:

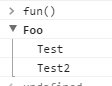

Additionally, there's console.group/console.groupEnd, but that requires you to wrap all your log calls between .group(name) and .groupEnd().

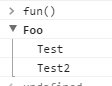

The problem is that there is no concept of scope inherent to the logger. For example, it would be useful to create either a scoped logger or pass in a scope name:

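```javascript
// Proposed API, not something browsers implement.
// Option 1: pass in a scope name with every call
console.logScoped("Foo", "My Log Message");
console.warnScoped("Foo", "A Warning, oh noes!");

// Option 2: create a scoped logger once and reuse it
var scoped = console.createScope("Foo");
scoped.log("My Log Message");
scoped.warn("A Warning, oh noes!");
```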
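Until browsers offer something like this, a rough userland approximation is possible. The sketch below is hypothetical, and createScopedLogger is not a browser API. It prefixes each message with its scope and forwards it to the matching console method. That gives you scope labels and text filtering, but not separate sinks.

```javascript
// Hypothetical userland approximation of a scoped logger. `target` defaults
// to the real console but can be swapped out, e.g. for testing.
function createScopedLogger(scope, target = console) {
  const prefix = `[${scope}]`;
  return {
    log: (...args) => target.log(prefix, ...args),
    info: (...args) => target.info(prefix, ...args),
    warn: (...args) => target.warn(prefix, ...args),
    error: (...args) => target.error(prefix, ...args),
  };
}

const foo = createScopedLogger("Foo");
foo.log("My Log Message");
foo.warn("A Warning, oh noes!");
```

Typing [Foo] into the console's filter box then narrows the output to one module. That is a workaround, not a replacement for real per-scope support.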

This would allow browsers to create different sinks for their logs, e.g., a separate console tab per scope.

From there, we can even think about stuff like "Write all log messages with this scope to a file" and then I could browse the site, test out functionality and then check the logfile afterwards.
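The "write all log messages with this scope to a file" idea can be approximated today with a buffer per scope. The class below is a hypothetical sketch, and ScopedLogSinks is not a browser API. Every message is stored under its scope with a timestamp, and dump() renders one scope as text that could be saved as a log file after a test session.

```javascript
// Hypothetical per-scope sinks: messages are kept in memory, grouped by scope.
class ScopedLogSinks {
  constructor() {
    this.buffers = new Map(); // scope name -> array of { level, time, message }
  }

  write(scope, level, ...args) {
    if (!this.buffers.has(scope)) this.buffers.set(scope, []);
    const message = args.map(String).join(" ");
    this.buffers.get(scope).push({ level, time: new Date().toISOString(), message });
  }

  // Render one scope's messages as text, e.g. to save as a log file.
  dump(scope) {
    return (this.buffers.get(scope) || [])
      .map((entry) => `${entry.time} ${entry.level.toUpperCase()} ${entry.message}`)
      .join("\n");
  }
}

const sinks = new ScopedLogSinks();
sinks.write("Foo", "log", "My Log Message");
sinks.write("Bar", "warn", "Unrelated warning");
sinks.write("Foo", "warn", "A Warning, oh noes!");
console.log(sinks.dump("Foo"));
```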

Of course, there are a few issues to solve (Do we support child scopes? Would we expose sinks/output redirection via some sort of API?), but I think that it's time to have a look at how to turn the console.log mechanism from printf-style debugging into something a lot closer to a dev logging facility.

September 2nd, 2016 in Uncategorized

This is a bit of a follow-up to my 3 year old post about LINQ 2 SQL and my even older 2011 Thoughts on ORM post. I've changed my position several times over the last three years as I learned about issues with different approaches. The TL;DR is that for reads, I'd prefer handwritten SQL with Dapper for .Net as a mapper to avoid having to deal with DataReaders. For Inserts and Updates, I'm a bit torn.

I still think that L2S is a vastly better ORM than Entity Framework if you're OK with solely targeting MS SQL Server, but once you go into even slightly more complex scenarios, you'll run into issues like the dreaded SELECT N+1 (one query for a list, then one additional query per item in it) far too easily. This is especially true if you pass around entities through layers of code, because now virtually any part of your code (incl. your View Models or serialization infrastructure) might make a ton of additional SQL calls.
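For readers who haven't run into it, here is a minimal JavaScript simulation of SELECT N+1. The fake database and its query functions are made up, and each call counts as one round trip. Touching a lazily loaded collection per row costs one extra query per account on top of the list query. A set-based query (the kind you'd write by hand with GROUP BY) needs a fixed number of round trips no matter how many accounts there are.

```javascript
// Fake database that counts round trips; data and methods are illustrative.
const fakeDb = {
  queries: 0,
  accounts: [{ id: 1 }, { id: 2 }, { id: 3 }],
  transactions: [
    { accountId: 1, amount: 100 }, { accountId: 1, amount: -20 },
    { accountId: 2, amount: 50 }, { accountId: 3, amount: 75 },
  ],
  getAccounts() { this.queries++; return this.accounts.map((a) => ({ ...a })); },
  getTransactionsFor(accountId) {
    this.queries++;
    return this.transactions.filter((t) => t.accountId === accountId);
  },
  // One set-based query, like SELECT AccountId, SUM(Amount) ... GROUP BY AccountId
  getBalances() {
    this.queries++;
    const sums = new Map();
    for (const t of this.transactions) sums.set(t.accountId, (sums.get(t.accountId) || 0) + t.amount);
    return sums;
  },
};

// Lazy-loading style: one query for the list, then one more per account (1 + N).
function balancesLazy() {
  return fakeDb.getAccounts().map((a) => ({
    id: a.id,
    balance: fakeDb.getTransactionsFor(a.id).reduce((sum, t) => sum + t.amount, 0),
  }));
}

// Set-based style: two queries total, regardless of the number of accounts.
function balancesBatched() {
  const sums = fakeDb.getBalances();
  return fakeDb.getAccounts().map((a) => ({ id: a.id, balance: sums.get(a.id) || 0 }));
}

fakeDb.queries = 0; balancesLazy(); console.log("lazy:", fakeDb.queries);       // 4 (1 + 3)
fakeDb.queries = 0; balancesBatched(); console.log("batched:", fakeDb.queries); // 2
```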

The main problem here isn't so much detecting the issue (Tools like MiniProfiler or L2S Prof make that easy) - it's that fixing the issue can result in a massive code refactor. You'd have to break up your EntitySets and potentially create new business objects, which then require a bunch of further refactorings.

My strategy has been this for the past two years:

- All Database Code lives in a Repository Class

- The Repository exposes Business-objects

- After the Repository returns a value, no further DB Queries happen without the repository being called again

All Database Code lives in a Repository Class

I have one or more classes that end in Repository and it's these classes that implement the database logic. If I need to call the database from my MVC Controller, I need to call a repository. That way, any database queries live in one place, and I can optimize the heck out of calls as long as the inputs and outputs stay the same. I can also add a caching layer right there.

The Repository exposes Business-objects

If I have a specialized business type (say AccountWithBalances), then that's what the repository exposes. I can write a complex SQL Query that joins a bunch of tables (to get the account balances) and optimize it as much as I want. There are scenarios where I might have multiple repositories (e.g., AccountRepository and TransactionsRepository), in which case I need to make a judgement call: Should I add a "Cross-Entity" method in one of the repositories, or should I go one level higher into a service-layer (which could be the MVC Controller) to orchestrate?

After the Repository returns a value, no further DB Queries happen without the repository being called again

But regardless of where I decide the AccountWithBalances getter method should live, the one thing I'm not going to do is expose a live database object (one that can still lazy-load related data). Sure, it sounds convenient to get an Account and then just do var balance = acc.Transactions.Sum(t => t.Amount); but that will lead to 1am debugging sessions because your application broke down once more than two people hit it at the same time.
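Here is a minimal sketch of the three rules, in JavaScript rather than C#. AccountRepository, runQuery and the table/column names are hypothetical. runQuery stands in for whatever executes a parameterized query and maps rows (Dapper in the .NET world). The repository owns the SQL and returns a frozen plain object with the balance already computed. It also includes the optional caching layer mentioned above, though a real cache would need invalidation on writes, which is omitted here.

```javascript
// Hypothetical repository: all SQL lives here, and callers only ever receive
// plain, frozen business objects that cannot trigger further queries.
class AccountRepository {
  constructor(runQuery) {
    this.runQuery = runQuery; // (sql, params) => array of row objects
    this.cache = new Map();   // optional caching layer, keyed by account id
  }

  getAccountWithBalances(accountId) {
    if (this.cache.has(accountId)) return this.cache.get(accountId);
    // One hand-written query joins and aggregates in SQL.
    const rows = this.runQuery(
      `SELECT a.Id, a.Name, SUM(t.Amount) AS Balance
       FROM Accounts a LEFT JOIN Transactions t ON t.AccountId = a.Id
       WHERE a.Id = @id
       GROUP BY a.Id, a.Name`,
      { id: accountId }
    );
    if (rows.length === 0) return null;
    // SUM over no transactions yields NULL, hence the ?? 0.
    const result = Object.freeze({ id: rows[0].Id, name: rows[0].Name, balance: rows[0].Balance ?? 0 });
    this.cache.set(accountId, result);
    return result;
  }
}

// Usage with a stubbed runQuery that returns canned rows and counts calls.
let queryCount = 0;
const repo = new AccountRepository((sql, params) => {
  queryCount++;
  return params.id === 1 ? [{ Id: 1, Name: "Checking", Balance: 80 }] : [];
});

console.log(repo.getAccountWithBalances(1)); // hits the "database"
console.log(repo.getAccountWithBalances(1)); // served from the cache
console.log(queryCount);
```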

In the end, long-term maintainability suffers greatly otherwise. The ORM approach seems more productive (and it is, for simple apps), but once you get a grip on T-SQL, you end up writing your SQL queries anyway, and now you don't have to worry about inefficient SQL because you can look at the query execution plan and tweak. You're also not blocked from using optimized features like MERGE, hierarchyid or windowing functions. It's like going from MS SQL Server Lite to the real thing.

So raw ADO.net? Nah. The problem with that is that after you've written an awesome query, you have to deal with SqlDataReaders, which is not pleasant. If you were using LINQ 2 SQL, it would map to business objects for you, but it's slow. And I mean prohibitively so: I've had an app that queried the database in 200ms, but then took 18 seconds to map the results to .net objects. That's why I started using Dapper (Disclaimer: I work for Stack Overflow, but I used Dapper before I did). It doesn't generate SQL, but it handles parameters for me and it does the mapping, pretty fast actually.

If you know T-SQL, this is the way I'd recommend going because the long-term maintainability is worth it. And if you don't know T-SQL, I recommend taking a week or so to learn the basics, because long-term it's in your best interest to know SQL.

But what about Insert, Update and Delete?

Deletes are another thing that requires raw SQL. E.g., "delete all accounts that haven't visited the site in 30 days" can be expressed as a single SQL statement, but with an ORM you can fall into the trap of first fetching all those rows and then deleting them one by one, which is just nasty.
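To illustrate the trap, this JavaScript sketch only lists the statements each approach would send. It doesn't talk to a database. The dbo.Accounts table and LastVisit column are assumptions for the example. Deleting 500 inactive accounts one by one costs a SELECT plus 500 DELETEs, while the set-based version is a single statement.

```javascript
// Illustrative T-SQL statements; table and column names are assumptions.
const INACTIVE = "LastVisit < DATEADD(day, -30, GETDATE())";

// ORM-style: fetch the rows first, then delete each entity separately.
function deleteOneByOne(inactiveIds) {
  const statements = [{ sql: `SELECT Id FROM dbo.Accounts WHERE ${INACTIVE}` }];
  for (const id of inactiveIds) {
    statements.push({ sql: "DELETE FROM dbo.Accounts WHERE Id = @id", params: { id } });
  }
  return statements;
}

// Set-based: let the database find and delete the rows in one statement.
function deleteSetBased() {
  return [{ sql: `DELETE FROM dbo.Accounts WHERE ${INACTIVE}` }];
}

const inactive = Array.from({ length: 500 }, (_, i) => i + 1);
console.log("one by one:", deleteOneByOne(inactive).length, "round trips"); // 501
console.log("set-based:", deleteSetBased().length, "round trip");           // 1
```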

But where it gets really complicated is when it comes to foreign key relationships. This is actually a discussion we had internally at Stack Overflow, with good points on either side. Let's say you have a Bank Account, and you add a new Transaction that also has a new Payee (the person charging you money, e.g., your Mortgage company). The schema would be designed to have a Payees table (Id, Name) and a Transactions table (Id, Date, PayeeId, Amount) with a Foreign Key between Transactions.PayeeId and Payees.Id. In our example, we would have to insert the new Payee first, get their Id and then create a Transaction with that Id. In SQL, I would probably write a Sproc for this so that I can use a Merge statement:

```sql
CREATE PROCEDURE dbo.InsertTransaction
    @PayeeName nvarchar(100),
    @Amount decimal(19,4)
AS
BEGIN
    SET NOCOUNT ON;

    DECLARE @payeeIdTable TABLE (Id INTEGER);
    DECLARE @payeeId INT = NULL;

    -- Insert the payee if it doesn't exist yet; if it does, capture its Id.
    -- HOLDLOCK keeps two concurrent calls from inserting the same payee twice.
    MERGE dbo.Payees WITH (HOLDLOCK) AS TGT
    USING (VALUES (@PayeeName)) AS SRC (Name)
    ON (TGT.Name = SRC.Name)
    WHEN NOT MATCHED BY TARGET THEN
        INSERT (Name) VALUES (SRC.Name)
    WHEN MATCHED THEN
        UPDATE SET @payeeId = TGT.Id
    OUTPUT Inserted.Id INTO @payeeIdTable;

    -- For a newly inserted payee, the Id comes from the OUTPUT clause.
    IF @payeeId IS NULL
        SET @payeeId = (SELECT TOP 1 Id FROM @payeeIdTable);

    INSERT INTO dbo.Transactions (Date, PayeeId, Amount)
    VALUES (GETDATE(), @payeeId, @Amount);
END
GO
```

The problem is that once you have several Foreign Key relationships, possibly even nested, this can quickly become really complex and hard to get right. In this case, there might be a good reason to use an ORM, because built-in object tracking makes this a lot simpler. But this is the perspective of someone who never had performance problems doing INSERT or UPDATE, but plenty of problems doing SELECTs. If your application is INSERT-heavy, hand-rolled SQL is the way to go, if only because the dynamic SQL queries an ORM generates don't play well with SQL Server's Query Plan Cache. That is another reason to consider stored procedures (sprocs) for write-heavy applications.

To conclude: there are good reasons to use ORMs for developer productivity (especially if you don't know T-SQL well), but for me, I'll never touch an ORM for SELECTs again if I can avoid it.

August 24th, 2016 in Uncategorized

I have a custom DNS Server (running Linux) and I also have a server running Linux ("MyServer"). The DNS Server has an entry in /etc/hosts for MyServer.

On my Windows machines, I can nslookup MyServer and get the IP back, but when I try to access the machine through ping or any of the services it offers, the name doesn't resolve. Access via the IP Address works fine though.

What's interesting is that if I add a dot at the end (ping MyServer.) then it suddenly works. What's happening?!

What's happening is that Windows doesn't use DNS but NetBIOS for simple (single-label) name resolution. nslookup talks to the DNS Server directly, but anything else goes through the Windows resolver, which doesn't use DNS for a bare name like MyServer. The trailing dot marks the name as fully qualified, which is why MyServer. does get resolved via DNS.

The trick was to install Samba on MyServer, because it includes a NetBIOS name server (nmbd). On Ubuntu 16.04, just running sudo apt-get install samba installs and auto-starts the service, and from that moment on my Windows machines could access it without issue.

There are ways to not use NetBIOS, but I didn't want to make changes on every Windows client (since I'm using a Domain), so this was the simplest solution I could find. I still needed entries in my DNS Server so that Mac OS X can resolve it.

August 19th, 2016 in Development, Technology | tags: DNS, Linux, Windows

As Nick Craver explained in his blog post about our deployment process, we deploy Stack Overflow to production 5-10 times a day. Apart from the surrounding tooling (automated builds, one-click deploys, etc.), one of the reasons that is possible is that the master branch rarely goes stale: we don't use feature branches a lot. That makes for few merge nightmares or scenarios where a huge feature suddenly gets dropped into the codebase all at once.

The thing that made the whole "commit early, commit often" principle click for me was how easy it is to add new feature toggles to Stack Overflow. Feature Toggles (or Feature Flags), as described by Martin Fowler, let the application check a toggle to decide whether or not to show a new feature.

The Stack Overflow code base contains a Site Settings class with (as of right now) 1302 individual settings. Some of these are slight behavior changes for different sites (all 150+ Q&A sites run off the same code base), but a lot of them are feature toggles. When the new IME Editor was built, I added another feature toggle to make it only active on a few sites. That way, any huge issue would've been localized to a few sites rather than breaking all Stack Exchange sites.

Feature toggles allow a half-finished feature to live in master and to be deployed to production. In fact, I can intentionally do that if I want to test it with a limited group of users or have our community team try it before the feature gets released network-wide. (This is how the "cancel misclicked flags" feature was rolled out.) But most importantly, it allows changes to go live constantly. If there are any unintended side effects, we notice them faster and have an easier time locating them, since the relative changeset is small. Compare that to some massive merge that might introduce a whole bunch of issues all at once.
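Here's a hypothetical JavaScript sketch of per-site toggles. It shows the shape of the idea, not Stack Overflow's actual implementation. Each setting is declared once with a default, per-site overrides are layered on top, and code gates the feature behind a lookup. Class, method and site names are made up, and the setting name mirrors the AllowRetractingFlags setting from this post.

```javascript
// Hypothetical settings registry: declare once, override per site, look up anywhere.
class SiteSettingsRegistry {
  constructor() {
    this.definitions = new Map(); // name -> { defaultValue, description }
    this.overrides = new Map();   // site -> Map(name -> value)
  }

  define(name, defaultValue, description) {
    this.definitions.set(name, { defaultValue, description });
  }

  override(site, name, value) {
    if (!this.definitions.has(name)) throw new Error(`Unknown setting: ${name}`);
    if (!this.overrides.has(site)) this.overrides.set(site, new Map());
    this.overrides.get(site).set(name, value);
  }

  get(site, name) {
    const def = this.definitions.get(name);
    if (!def) throw new Error(`Unknown setting: ${name}`);
    const siteOverrides = this.overrides.get(site);
    return siteOverrides && siteOverrides.has(name) ? siteOverrides.get(name) : def.defaultValue;
  }
}

const settings = new SiteSettingsRegistry();
settings.define("AllowRetractingFlags", false, "Enable to allow users to retract their flags.");
settings.override("meta.stackoverflow.com", "AllowRetractingFlags", true); // try it on one site first

for (const site of ["meta.stackoverflow.com", "stackoverflow.com"]) {
  if (settings.get(site, "AllowRetractingFlags")) {
    console.log(`${site}: show the retract-flag button`);
  } else {
    console.log(`${site}: keep the old behavior`);
  }
}
```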

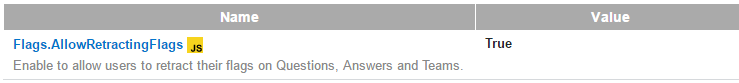

For feature toggles to work, it must be easy to add new ones. When you start a new project and want to add your first feature toggle, it may be tempting to just hard-code that one toggle, but as the code base grows, having an easy mechanism really pays off. Let me show you how I add a new feature toggle to Stack Overflow:

```csharp
[SiteSettings]
public partial class SiteSettings
{
    // ... other properties ...

    [DefaultValue(false)]
    [Description("Enable to allow users to retract their flags on Questions, Answers and Teams.")]
    [AvailableInJavascript]
    public bool AllowRetractingFlags { get; private set; }
}
```

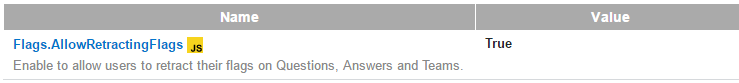

When I recompile and run the application, I can go to a developer page and view/edit the setting:

Anywhere in my code, I can gate code behind an if (SiteSettings.AllowRetractingFlags) check, and I can even use that in JavaScript if I decorate it with the [AvailableInJavascript] attribute (Néstor Soriano added that feature recently, and I don't want to miss it anymore).

Note what I did not have to do: I did not need to create any sort of admin UI, I did not need to write some CRUD logic to persist the setting in the database, I did not need to update some Javascript somewhere. All I had to do was to add a new property with some attributes to a class and recompile. What's even better is that I can use other datatypes than bool - our code supports at least strings and ints as well, and it is possible to add custom logic to serialize/deserialize complex objects into a string. For example, my site setting can be a semi-colon separated list of ints that is entered as 1;4;63;543 on the site, but comes back as an int-array of [1,4,63,543] in both C# and JavaScript.
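The string-to-array conversion for such a setting could look like the sketch below. It assumes the setting is stored as a semicolon-separated string. parseIntList and serializeIntList are hypothetical names. The strict integer check is a design choice, so that a typo fails loudly instead of silently becoming NaN.

```javascript
// Hypothetical (de)serialization for an int-list setting stored as "1;4;63;543".
function parseIntList(raw) {
  if (raw == null) return [];
  return raw
    .split(";")
    .map((part) => part.trim())
    .filter((part) => part !== "") // tolerate a trailing ";" or an empty setting
    .map((part) => {
      if (!/^-?\d+$/.test(part)) throw new Error(`Not an integer: "${part}"`);
      return Number(part);
    });
}

function serializeIntList(values) {
  return values.join(";");
}

console.log(parseIntList("1;4;63;543"));        // [ 1, 4, 63, 543 ]
console.log(serializeIntList([1, 4, 63, 543])); // 1;4;63;543
```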

I wasn't around when that feature was built and don't know how much effort it took, but it was totally worth building it. If I don't want a feature to be available, I just put it behind a setting without having to dedicate a bunch of time to wire up the management of the new setting.

Feature Toggles. Use them liberally, by making it easy to add new ones.

August 8th, 2016 in Development | tags: Stack Overflow

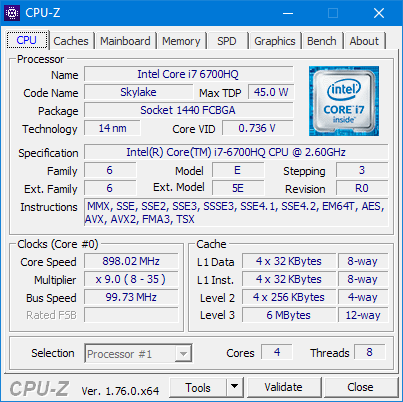

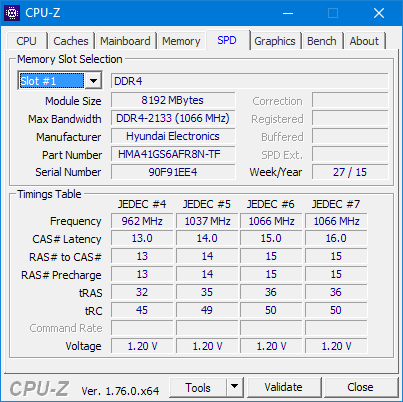

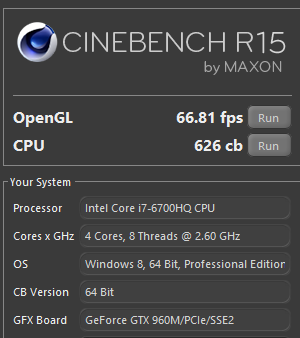

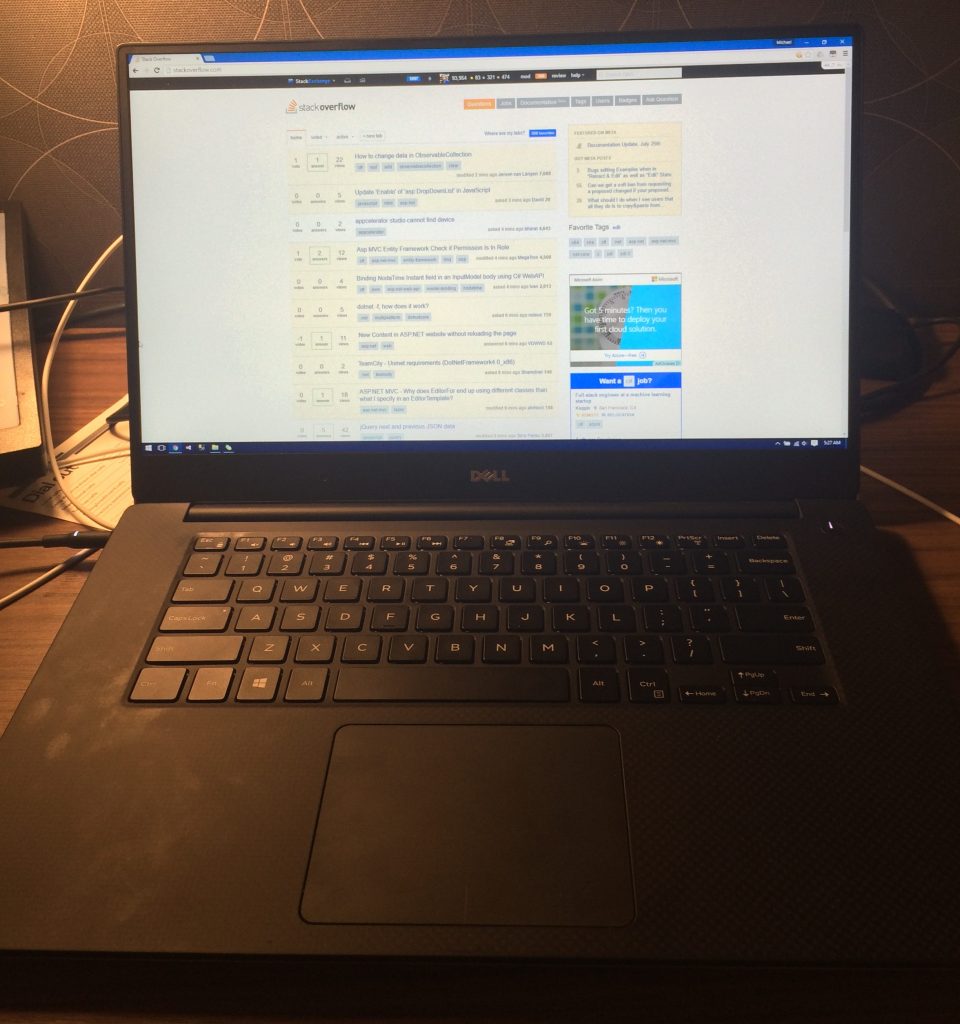

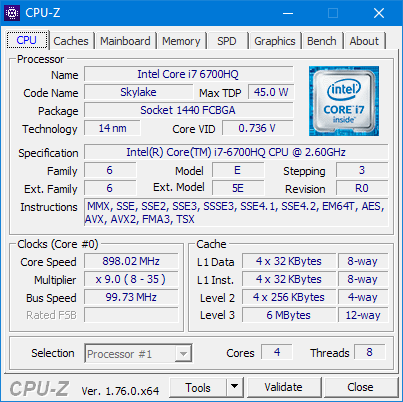

One of the perks of working for Stack Overflow is that you get to choose your own work computer. I decided to go with a 2016 Dell XPS 15 (9550) (not to be confused with the earlier XPS 15, which had the model number 9530) and settled on this configuration:

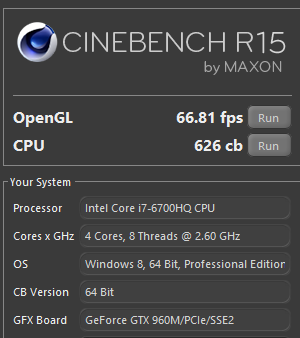

- Core i7-6700HQ CPU (2.6 GHz 4 Core w/ HT, up to 3.5 GHz Turbo)

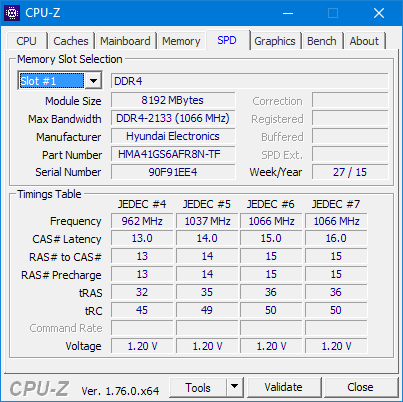

- 16 GB RAM (2x8 GB Dual Channel DDR4, 15 CAS Latency)

- Intel HD Graphics 530 + nVidia GeForce GTX 960M (640 CUDA Cores, 2 GB dedicated GDDR5)

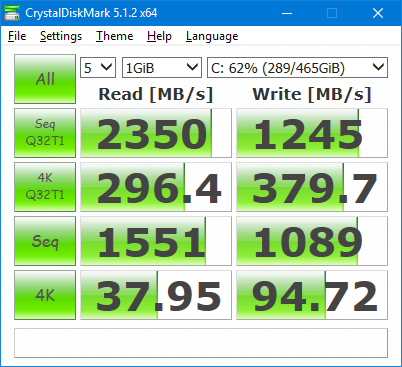

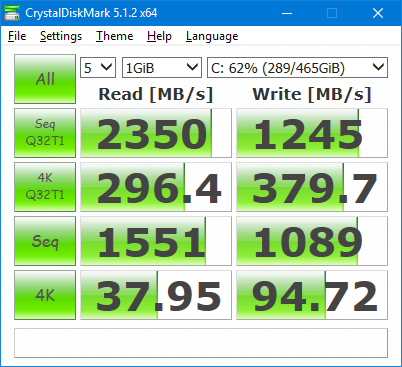

- 512 GB SSD (M.2, PCI Express-based with NVMe. Mine is a Toshiba THNSN5512GPU7, PCI Express Gen 3, 4 Lanes, seems to be an OEM Version of the OCZ RD400. It seems that Dell also uses Samsung SSDs, not sure which one)

- 15" 1920x1080 display

- Windows 10 Pro, 64-Bit

- 2x USB 3.0, 1x USB-C (USB 3.1 Gen2 / 10 Gbps) that supports Thunderbolt 3 (40 Gbps)

One of the big selling features of the XPS 15 is a gorgeous 4K (3840x2160) display, so why didn't I get that one? Simple: at 15", I'd have to use Windows display scaling to keep things from being too tiny. Display scaling is a lot better in Windows 10 than it was in 7 or 8, but it's still not great. I have used display scaling on Mac OS X, and by comparison, on Windows it's still just a giant crutch. So I decided on the normal 1080p display, and I like it a lot. It's bright, it's IPS and thus doesn't suffer from colors shifting when viewed from the side, and it doesn't drain the battery nearly as much as the 4K screen.

At home, I'm also running two 2560x1440 displays (One Dell U2715H connected via a USB-C-to-DisplayPort adapter, and one Dell U2515H connected via HDMI).

The external display situation is a bit weird at the moment (late July 2016). The XPS 15 has a Thunderbolt port, so supporting 2x 4K monitors at 60 Hz each should be possible using the TB15 Thunderbolt dock. The problem is that the TB15 doesn't work properly and is currently not sold (Dell might have a fix sometime in August). There have been 3 or 4 Thunderbolt BIOS updates over the last few weeks, but as it stands right now, unless you have a Thunderbolt display, the port doesn't do much.

It can be used as a normal USB-C port and drive a single 4K screen at 60 Hz with the DisplayPort adapter cable, and that works fine. Dell does have a USB-C dock (Dell WD15) which has HDMI and DisplayPort, but unlike the laptop's built-in HDMI port, it cannot drive a 2560x1440 screen over HDMI.

That basically means that unless you're only using 1080p screens, there isn't a good dock out there and you're better off connecting external screens directly to the laptop. I have tried daisy chaining the two 2560x1440 displays (2nd display into the 1st using a DisplayPort cable, 1st into the USB-C port using a DP-to-USB-C cable), and that worked fine.

The keyboard is surprisingly good. It's still a laptop keyboard, but it has normal-sized keys with enough travel to not feel strange. Because it's a 15" laptop and the keyboard sits towards the screen, though, my arm rests on the bottom edge of the laptop, which isn't the most comfortable position. It's one of the sacrifices to be made. The covering is a rubbery material that feels good, but fingerprints are readily visible.

The touchpad is pretty good, as close to a MacBook touchpad as I've encountered so far, although it doesn't have the glass surface that makes the MacBook's feel frictionless. It was definitely one of the reasons I wanted a Dell XPS laptop: the touchpad is one of the main reasons to buy an Apple laptop, and with this one I don't regret not getting one.

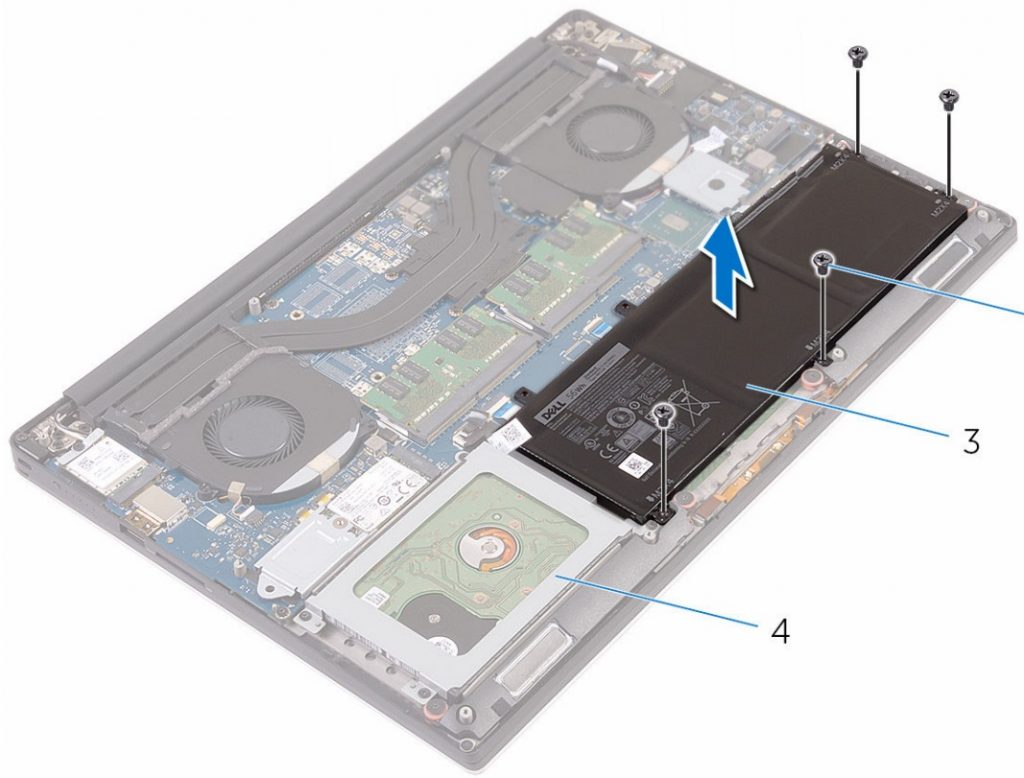

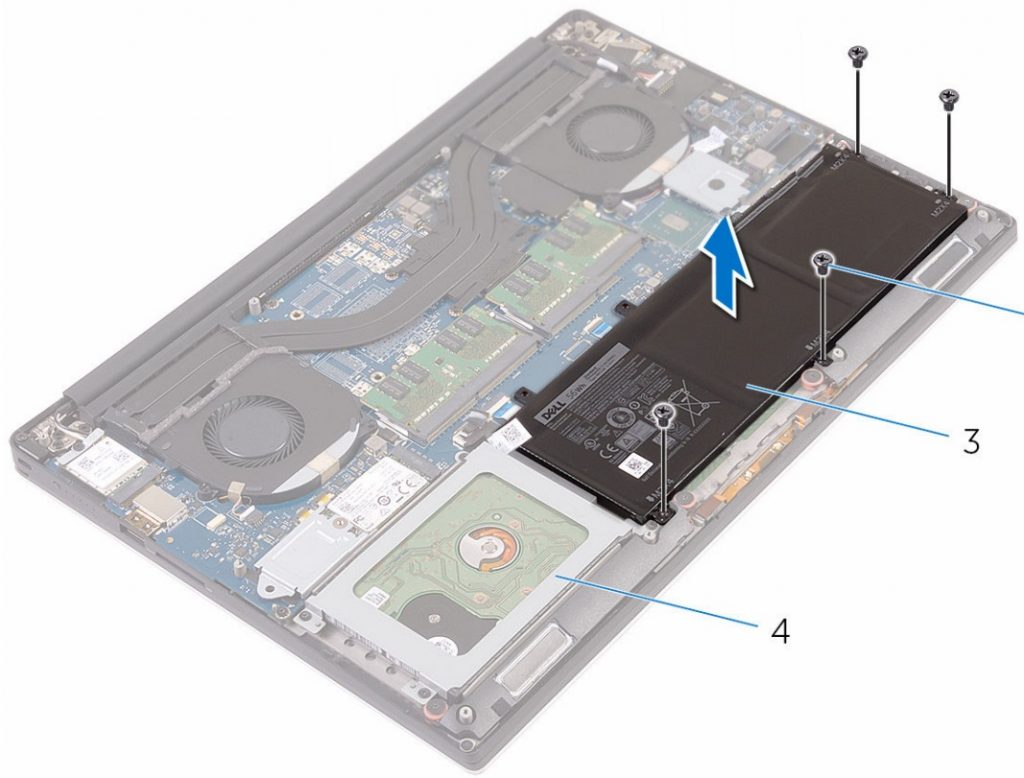

There are two battery choices, 56 WHr and 84 WHr, and the battery situation is a bit interesting. Basically, the lower-end models come with a 2.5" SATA hard drive and a 32 GB M.2 SSD.

The higher-end models come with only an M.2 SSD and either a 56 or 84 WHr battery. The 84 WHr battery takes up the space where the 2.5" hard drive would go, so it's not possible to add a 2.5" hard drive to an XPS 15 with the 84 WHr battery (in case you were thinking of adding a second drive for data).

In theory, it is possible to add a 2.5" drive to a model with the 56 WHr battery, but no mounting hardware is included and it seems Dell doesn't sell it individually. So if you're really thinking of putting in two hard drives (say, a dream configuration of a 1 TB Samsung SM961 and a 4 TB Samsung 850 EVO), you'd have to buy the XPS 15 in a configuration that includes a hard drive and swap them out.

Battery runtime with the 84 WHr battery is pretty good. Dell makes some lofty claims of 17 hours of runtime that of course aren't reached in real world use, but I get at least 6 hours out of my normal use (WiFi enabled, nothing connected to USB, display at about 60% brightness, Visual Studio, SQL Server, IIS, no video streaming). The i7 uses a bit more power than the Core i5-6300HQ that is also offered, but not much more, since both are quad-cores and the i7 basically just adds Hyper-Threading. (There is also a model with a Core i3-6100H CPU, but honestly, I'd get at least the i5.) From what others have said, the 4K Infinity Display really drains the battery.

Overall, after using the laptop for about 5 weeks both as a stationary computer (external displays, keyboard and mouse) and as a portable, I'm highly satisfied with it. Thunderbolt woes aside, its insides are up to date with a Skylake CPU, a PCI Express NVMe SSD, a really good IPS display, pretty much the best Wintel touchpad out there, and USB-C. Literally the only other Windows laptop I would look at is the XPS 13, for something a bit smaller. With laptops like these, comparing them to the MacBook Pro is always a hot topic, despite the MBP's really outdated hardware (as of July 31). For me, it boiled down to whether I needed to run Mac OS X, and since I already have two Macs (Late 2010 Mac Pro, 2015 Retina MacBook), the answer was "no", and thus the Dell XPS 15 won out. So far, I do not regret that decision.

(Note that BitLocker is enabled, which may skew results downward a bit)

August 3rd, 2016 in Hardware | tags: Stack Overflow

Stack Overflow has been expanding past the English-speaking community for a while, and with the launch of both a Japanese version of Stack Overflow and a Japanese Language Stack Exchange (for English speakers interested in learning Japanese) we now have people using IME input regularly.

For those unfamiliar with IME (Input Method Editor, as I was a week ago), it's an input aid where you compose words with the help of the operating system.

In this clip, I'm using the cursor keys to go up/down through the suggestions list, and I can use the Enter key to select a suggestion.

The problem is that navigating the suggestions actually sends keydown and keyup events, and so does pressing Enter to pick one. Interestingly enough, IME does not send keypress events. Since Enter also submits comments on Stack Overflow, selecting an IME suggestion also submitted the comment, which was hugely disruptive when writing Japanese.

Browsers these days emit events for IME composition, which allowed us to handle this properly. There are three events: compositionstart, compositionupdate and compositionend.

Of course, different browsers handle these events slightly differently (especially compositionupdate), and also behave differently in how they treat keyboard events.

- Internet Explorer 11, Firefox and Safari emit a keyup event after compositionend
- Chrome and Edge do not emit a keyup event after compositionend
- Safari additionally emits a keydown event (event.which is 229)

So the fix is relatively simple: while you're composing a word, Enter should not submit the form. The tricky part was figuring out when composing is done. That requires swallowing the keyup event that follows compositionend on browsers that emit it, without forcing people on browsers that don't emit it to press Enter an additional time.

The code I ended up writing uses two boolean variables to keep track of whether we're currently composing and whether composition just ended. In the latter case, we swallow the next keyup event unless a keydown event comes first, and only if that keydown event is not Safari's 229. That's a lot of ifs, but so far it seems to work as expected.

```javascript
submitFormOnEnterPress: function ($form) {
    var $txt = $form.find('textarea');
    var isComposing = false; // IME Composing going on
    var hasCompositionJustEnded = false; // Used to swallow keyup event related to compositionend

    $txt.keyup(function(event) {
        if (isComposing || hasCompositionJustEnded) {
            // IME composing fires keydown/keyup events
            hasCompositionJustEnded = false;
            return;
        }

        if (event.which === 13) {
            $form.submit();
        }
    });

    $txt.on("compositionstart",
            function(event) {
                isComposing = true;
            })
        .on("compositionend",
            function(event) {
                isComposing = false;
                // some browsers (IE, Firefox, Safari) send a keyup event after
                // compositionend, some (Chrome, Edge) don't. This is to swallow
                // the next keyup event, unless a keydown event happens first
                hasCompositionJustEnded = true;
            })
        .on("keydown",
            function(event) {
                // Safari on OS X may send a keydown of 229 after compositionend
                if (event.which !== 229) {
                    hasCompositionJustEnded = false;
                }
            });
},
```

Here's a jsfiddle to see the keyboard events that are emitted.
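Because the logic depends on event order, it helps to pull the same state machine out of jQuery and replay event sequences against it. This is a hypothetical, framework-free JavaScript model of the handler above (createEnterSubmitGuard and replay are made-up names). The sequences are simplified from the browser list in this post and were not recorded from real browsers.

```javascript
// Framework-free model of the jQuery handler: same flags, same rules.
function createEnterSubmitGuard(onSubmit) {
  let isComposing = false;             // IME composition in progress
  let hasCompositionJustEnded = false; // swallow the keyup that may follow compositionend

  return function handle(type, which) {
    switch (type) {
      case "compositionstart":
        isComposing = true;
        break;
      case "compositionend":
        isComposing = false;
        hasCompositionJustEnded = true;
        break;
      case "keydown":
        // Safari may send a keydown with which === 229 after compositionend
        if (which !== 229) hasCompositionJustEnded = false;
        break;
      case "keyup":
        if (isComposing || hasCompositionJustEnded) {
          hasCompositionJustEnded = false;
          return;
        }
        if (which === 13) onSubmit();
        break;
    }
  };
}

// Replays [eventType, which] pairs and returns how often the form was submitted.
function replay(events) {
  let submits = 0;
  const handle = createEnterSubmitGuard(() => submits++);
  for (const [type, which] of events) handle(type, which);
  return submits;
}

// Enter picks an IME suggestion, then a second Enter should submit exactly once.
const firefoxLike = [["compositionstart"], ["compositionend"], ["keyup", 13], ["keydown", 13], ["keyup", 13]];
const chromeLike = [["compositionstart"], ["compositionend"], ["keydown", 13], ["keyup", 13]];
const safariLike = [["compositionstart"], ["compositionend"], ["keydown", 229], ["keyup", 13], ["keydown", 13], ["keyup", 13]];

console.log(replay(firefoxLike), replay(chromeLike), replay(safariLike));
```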

June 24th, 2016 in Development | tags: Stack Overflow

One of the hidden gems in the .net Framework is the System.Security.Cryptography.Xml.SignedXml class, which lets you sign XML documents and validate the signatures of signed XML documents.

While implementing both a SAML 2.0 Service Provider library and an Identity Provider, I found that RSA-SHA256 signatures are common, but not straightforward. Validating them is relatively easy: add a reference to System.Deployment and run this on app startup:

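```csharp
using System.Deployment.Internal.CodeSigning; // RSAPKCS1SHA256SignatureDescription (System.Deployment.dll)
using System.Security.Cryptography;           // CryptoConfig

// Register the RSA-SHA256 signature description so SignedXml can validate it
CryptoConfig.AddAlgorithm(
    typeof(RSAPKCS1SHA256SignatureDescription),
    "http://www.w3.org/2001/04/xmldsig-more#rsa-sha256");
```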

However, signing documents with RSA-SHA256 (using the certificate's private key) throws a NotSupportedException when calling SignedXml.ComputeSignature(). It turns out that only .net Framework 4.6.2 will add support for the SHA2 family:

> X509 Certificates Now Support FIPS 186-3 DSA
>
> The .NET Framework 4.6.2 adds support for DSA (Digital Signature Algorithm) X509 certificates whose keys exceed the FIPS 186-2 limit of 1024-bit.
>
> In addition to supporting the larger key sizes of FIPS 186-3, the .NET Framework 4.6.2 allows computing signatures with the SHA-2 family of hash algorithms (SHA256, SHA384, and SHA512). The FIPS 186-3 support is provided by the new DSACng class.
>
> Keeping in line with recent changes to RSA (.NET Framework 4.6) and ECDsa (.NET Framework 4.6.1), the DSA abstract base class has additional methods to allow callers to make use of this functionality without casting.

After updating my system to the 4.6.2 preview, signing XML documents works flawlessly:

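```csharp
using System.Security.Cryptography.X509Certificates; // X509Certificate2, GetRSAPrivateKey()
using System.Security.Cryptography.Xml;              // SignedXml, Reference, XmlDsigEnvelopedSignatureTransform
using System.Xml;                                    // XmlDocument, XmlElement

// exported is a byte[] that contains an exported cert incl. private key
var myCert = new X509Certificate2(exported);
var certPrivateKey = myCert.GetRSAPrivateKey();

var doc = new XmlDocument();
doc.LoadXml("<root><test1>Foo</test1><test2><bar baz=\"boom\">Real?</bar></test2></root>");

var signedXml = new SignedXml(doc);
signedXml.SigningKey = certPrivateKey;
// Request RSA-SHA256 explicitly; depending on the framework version, SignedXml may otherwise default to RSA-SHA1
signedXml.SignedInfo.SignatureMethod = "http://www.w3.org/2001/04/xmldsig-more#rsa-sha256";

// Sign the whole document (empty URI) with an enveloped signature
Reference reference = new Reference();
reference.Uri = "";
reference.DigestMethod = "http://www.w3.org/2001/04/xmlenc#sha256";
XmlDsigEnvelopedSignatureTransform env = new XmlDsigEnvelopedSignatureTransform();
reference.AddTransform(env);
signedXml.AddReference(reference);

signedXml.ComputeSignature();

// Append the generated <Signature> element to the document
XmlElement xmlDigitalSignature = signedXml.GetXml();
doc.DocumentElement.AppendChild(doc.ImportNode(xmlDigitalSignature, true));

// doc is now a Signed XML document
```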

May 19th, 2016 in Development