Adding multiple devices to one PCI Express Slot

Most ATX mainboards have somewhere between 3 and 5 PCI Express slots. Typically that means an x16, an x8, and some x1 slots, but if you have, e.g., a Threadripper X399 board, you might have several x8 and x16 slots.

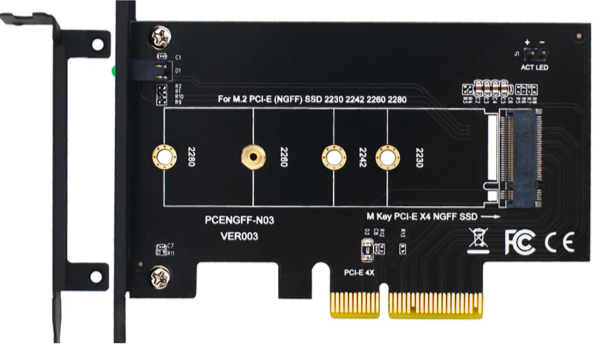

But what if you want more devices than you have slots? Let's say you want a whole bunch of M.2 NVMe drives, which are only x4 each. Ignoring any motherboard M.2 slots, you can use adapter cards that simply convert a PCI Express slot into an M.2 slot.

But now you're wasting an entire x16 slot on just one x4 card. Worse, if you're out of x16/x8 slots and have to use an x1 slot, you're not running at proper speeds. And what's the point of having 64 PCI Express lanes on a Threadripper CPU if you can't connect a ton of devices to it at full speed?

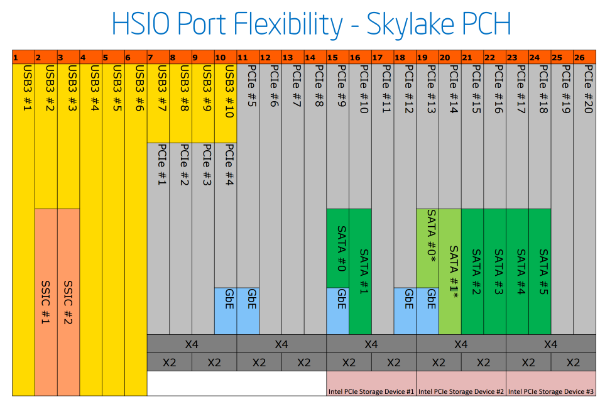

The thing is that PCI Express lanes can be combined in different ways. If a CPU has 16 PCI Express lanes, a mainboard manufacturer could in theory put 16 x1 Slots on the board (like some mining boards), or 1 x16 slot. There are some limitations, and chipsets offer their own additional PCI Express lanes. See for example this diagram from Anandtech/Intel:

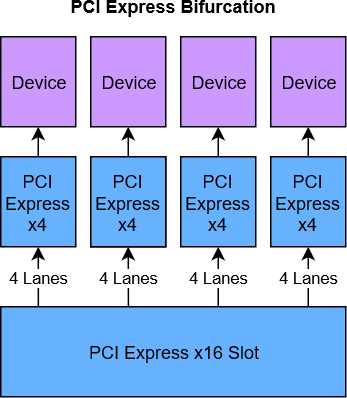

So, to circle back to the topic: Can we just connect more than one device to a PCI Express slot? After all, treating a PCI Express x16 slot as 4 individual x4 slots would solve the NVMe problem, as this would allow 4 NVMe drives on a single x16 slot with no speed penalty.

And it turns out, this option exists. Actually, there are multiple options.

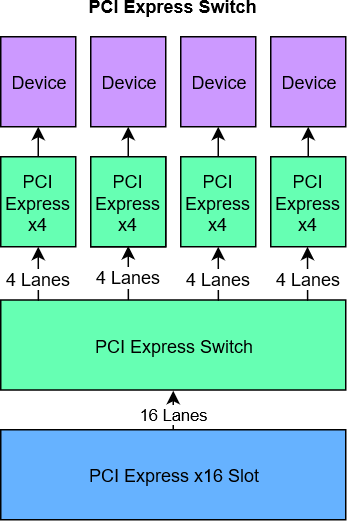

Using a PCI Express Switch

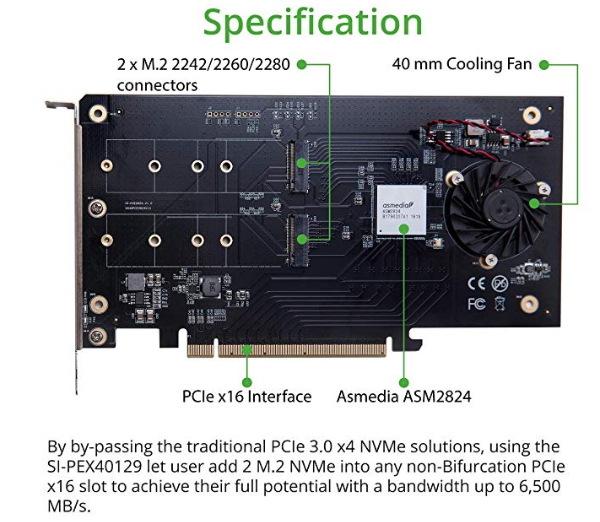

Like a Network Switch, a PCI Express Switch connects multiple PCI Express devices to a shared bus. A popular example is the ASM2824.

The advantage of a PCI Express Switch is that it's compatible with pretty much any mainboard, which would make it a great option if it weren't for the price. A Quad NVMe card with a PCI Express Switch costs around $200 at the moment.

I do not know if there are any performance deficits, though assuming you're using a PCI Express x16 slot, you should be fine. It should be possible to use these cards in x8, x4, and even x1 slots, though of course now your 16 NVMe lanes have to go through the bottleneck that's the slot.
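To put rough numbers on that bottleneck, here is a minimal sketch. It assumes PCIe 3.0 (8 GT/s per lane with 128b/130b encoding), ignores protocol overhead, and the function names are made up for illustration. It simply takes the smaller of the slot's bandwidth and the combined bandwidth of the drives behind the switch.

```python
# Approximate usable PCIe 3.0 bandwidth per lane in GB/s:
# 8 GT/s with 128b/130b encoding, divided by 8 bits per byte.
# Protocol overhead is ignored, so real numbers will be a bit lower.
PCIE3_GBYTES_PER_LANE = 8 * (128 / 130) / 8  # about 0.985 GB/s


def link_bandwidth(lanes: int) -> float:
    """Theoretical bandwidth of a PCIe 3.0 link with the given lane count."""
    return lanes * PCIE3_GBYTES_PER_LANE


def switch_card_bandwidth(slot_lanes: int, drives: int = 4, lanes_per_drive: int = 4) -> float:
    """Total bandwidth available to all drives behind a switch card.

    The switch cannot deliver more than its upstream link (the slot),
    and the drives cannot use more than their own downstream links.
    """
    upstream = link_bandwidth(slot_lanes)
    downstream = link_bandwidth(drives * lanes_per_drive)
    return min(upstream, downstream)


for slot in (16, 8, 4, 1):
    total = switch_card_bandwidth(slot)
    print(f"x{slot:<2} slot: {total:5.2f} GB/s shared, "
          f"{total / 4:5.2f} GB/s per drive if all four are busy")
```

A single drive can still reach its full x4 speed in an x8 or x4 slot as long as the other drives are idle; the slot only becomes the limit when the combined traffic exceeds it.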

Using Bifurcation

Beef-o-what? From Wiktionary:

Verb

bifurcate (third-person singular simple present bifurcates, present participle bifurcating, simple past and past participle bifurcated)

(intransitive) To divide or fork into two channels or branches.

(transitive) To cause to bifurcate.

Synonyms

branch, fork

Bifurcation is the act of splitting the lanes of one slot into several independent links. For example, you take 4 lanes of an x16 slot and turn them into a separate x4 slot, then take another 4 lanes and turn them into another x4 slot, etc.

In theory, you would be able to split an x16 slot into 16 individual x1 slots, or into 1x4, 1x8, and 4x1, though I'm not aware of any mainboard that supports this.
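To get a feeling for how many splits are possible on paper, here is a small sketch that enumerates every way to divide a slot's lanes into links of standard widths. The function name and the list of widths are my own choices for illustration; real hardware only supports a small subset of these combinations.

```python
def lane_splits(total, widths=(16, 8, 4, 2, 1)):
    """Yield every way to divide `total` lanes into links of the given widths.

    Each split is a list of link widths, widest link first, so the same
    combination is never produced twice in a different order.
    """
    ordered = sorted(widths, reverse=True)

    def recurse(remaining, start):
        if remaining == 0:
            yield []
            return
        for i in range(start, len(ordered)):
            width = ordered[i]
            if width <= remaining:
                # Only allow links of the same or smaller width from here on.
                for rest in recurse(remaining - width, i):
                    yield [width] + rest

    yield from recurse(total, 0)


all_splits = list(lane_splits(16))
print(len(all_splits), "theoretical ways to split an x16 slot")

# Restricting the widths to x16, x8 and x4 leaves only a handful of options.
for split in lane_splits(16, widths=(16, 8, 4)):
    print("+".join(f"x{w}" for w in split))
```

The "1x8, 1x4, and 4x1" example from above shows up in the full list as `[8, 4, 1, 1, 1, 1]`.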

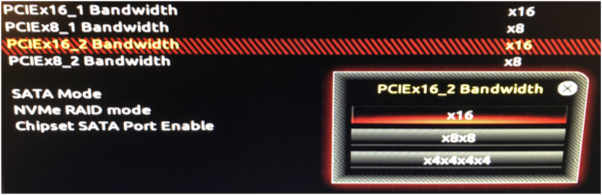

And that's the important part here: The mainboard needs to support this. There would need to be an option somewhere to tell the mainboard how to bifurcate the slot. Here's an example from the BIOS of the X399 AORUS Pro:

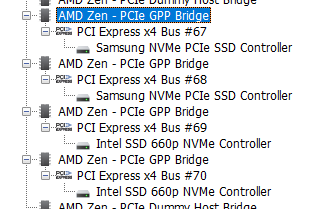

This board offers to bifurcate an x16 slot into either 1x16, 2x8, or 4x4. For x8 slots, I can choose 1x8 or 2x4. With this, I can use a card like the ASUS Hyper M.2 X16 Card V2, which is basically 4 of those NVMe-PCI-Express cards on one PCB.

The card itself has some electronics on it for power regulation, fan control and the like, but no PCI Express Bridge. The system gets presented with 4 individual PCI Express x4 buses.
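If you're on Linux, one way to check what the system actually sees is to read the negotiated link width of each NVMe controller from sysfs. This is a minimal sketch, assuming a Linux system that exposes the `class`, `current_link_width` and `current_link_speed` attributes under `/sys/bus/pci/devices`; the function name is made up for illustration.

```python
from pathlib import Path

# PCI class code for NVM Express controllers
# (mass storage, non-volatile memory, NVMe programming interface).
NVME_CLASS = "0x010802"


def nvme_links(sysfs_root="/sys/bus/pci/devices"):
    """Return (pci_address, link_width, link_speed) for every NVMe controller found."""
    links = []
    root = Path(sysfs_root)
    if not root.is_dir():
        # Not Linux, or sysfs is not mounted: nothing to report.
        return links
    for dev in sorted(root.iterdir()):
        try:
            if (dev / "class").read_text().strip() != NVME_CLASS:
                continue
            width = (dev / "current_link_width").read_text().strip()
            speed = (dev / "current_link_speed").read_text().strip()
        except OSError:
            # Attribute missing or unreadable for this device; skip it.
            continue
        links.append((dev.name, width, speed))
    return links


for address, width, speed in nvme_links():
    print(f"{address}: x{width} at {speed}")
```

With a bifurcated 4x4 slot and four drives installed, you'd expect four entries here, each reporting a width of 4.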

It's important to note that bifurcation does not "add" PCI Express lanes like a Switch could. You can't bifurcate an x8 slot into 4x4, and using the above card in such an x8 slot will only show one or two drives (in x8 or x4x4 mode, respectively).
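Here is a simplified model of why that happens. It assumes the passive card wires drive 0 to lanes 0-3, drive 1 to lanes 4-7, and so on, and that a drive only shows up if one of the slot's links starts exactly at its first lane. That wiring and the function name are assumptions for illustration, not something taken from a datasheet.

```python
def visible_drives(mode, drive_lanes=4, drive_count=4):
    """Return the indices of the drives that get a link in the given mode.

    `mode` is the list of link widths the slot is split into, in lane order,
    e.g. [4, 4, 4, 4] for 4x4 or [8, 8] for 2x8.
    """
    visible = []
    offset = 0  # first lane of the current link
    for width in mode:
        # A drive is reachable only if a link begins at its first lane
        # and the link is at least as wide as the drive.
        if offset % drive_lanes == 0 and width >= drive_lanes:
            index = offset // drive_lanes
            if index < drive_count:
                visible.append(index)
        offset += width
    return visible


modes = {
    "x16 slot, 4x4": [4, 4, 4, 4],
    "x16 slot, 2x8": [8, 8],
    "x16 slot, 1x16": [16],
    "x8 slot, 2x4": [4, 4],
    "x8 slot, 1x8": [8],
}
for name, mode in modes.items():
    print(f"{name}: drives {visible_drives(mode)}")
```

In this model only the 4x4 mode on an x16 slot reaches all four drives; every other setting leaves some lanes attached to a link that a neighbouring drive already owns.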

Bifurcation is not usually supported on "consumer" platforms, mainly because there aren't a lot of PCI Express lanes on most CPUs. Intel's flagship Core i9-9900K CPU has 16 total lanes. Sixteen. Total. AMD is a bit more generous with their Ryzen CPUs, offering 24 lanes (fewer on APUs). Now, some of those lanes are connected to the chipset to provide things like SATA, USB, onboard sound and networking. With your graphics card (likely x16) and an NVMe boot SSD (x4), you're already somewhere in the realm of 28 PCI Express lanes, though of course because they don't always use the full bandwidth, the PCI Express Switch in the CPU can handle this without performance loss.

But still, with so few lanes, bifurcation doesn't make sense. It does come into play on HEDT platforms, however. AMD's Threadripper boasts 64 PCI Express lanes, while EPYC has 128 of them. Intel's product lineup is a bit more segregated, with their LGA 2066 platform offering 16, 28, 44, or 48 lanes. Cascade Lake-W offers 64 PCI Express lanes.

HEDT - more than just more Cores

With AMD now offering 16 cores at 3.5 GHz in their Ryzen 9 3950X, and Intel bringing the Core i9-9900K to the LGA 1151 platform, there is a question whether LGA 2066 and Threadripper still make sense. Why spend so much more money on the CPU and mainboard?

However, as important as Core Count/Speed is in many cases, if you're building a fast storage server with SSD/NVMe Storage and up to 100 Gigabit Ethernet, you need those extra PCI Express lanes.

Four NVMe drives already need 16 PCIe 3.0 lanes. A single 10 Gigabit Ethernet port requires 2 more PCIe 3.0 lanes, and the chipset usually takes another 4 lanes for its own devices, bringing the total to 22. So you're already scraping the limit of Ryzen desktop CPUs, without much room for additional growth. SATA and USB are part of the chipset, but if you want SAS drives, more/faster network ports, a graphics card, or additional NVMe storage, you're back in the land of PCI Express Switches and compromising on bandwidth.
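The lane arithmetic above can be written down as a quick budget check. This is only a sketch using the numbers from this post (24 lanes on Ryzen desktop CPUs, 64 on Threadripper); the device list and function names are made up for illustration, and the per-lane figure assumes PCIe 3.0 without protocol overhead.

```python
import math

# Approximate PCIe 3.0 bandwidth per lane in GB/s (8 GT/s, 128b/130b encoding).
PCIE3_GBYTES_PER_LANE = 8 * (128 / 130) / 8


def lanes_needed(gbit_per_s: float) -> int:
    """Minimum number of PCIe 3.0 lanes that can carry the given line rate."""
    gbytes_per_s = gbit_per_s / 8
    return math.ceil(gbytes_per_s / PCIE3_GBYTES_PER_LANE)


def lane_budget(cpu_lanes: int, demand: dict) -> tuple:
    """Return (lanes used, lanes left over) for a CPU and a set of devices."""
    used = sum(demand.values())
    return used, cpu_lanes - used


demand = {
    "4x NVMe drives (x4 each)": 4 * 4,
    "10 Gigabit Ethernet": lanes_needed(10),
    "chipset link": 4,
}

for platform, cpu_lanes in (("Ryzen desktop", 24), ("Threadripper", 64)):
    used, free = lane_budget(cpu_lanes, demand)
    print(f"{platform}: {used} of {cpu_lanes} lanes used, {free} left")
```

Adding an x16 graphics card to the `demand` dictionary pushes the Ryzen budget well into the negative, while Threadripper still has lanes to spare.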

As said before, currently there is an actual choice of CPUs for different use cases. Do you want more cores, or faster cores? Two, Four, Six, or Eight memory channels? NVMe storage and/or RAID? A bleeding edge platform, or one that's around for years but likely on its way out soon?

After a decade of stagnation, building PCs is fun again!